Buried by Bots: How AI is Maxing Out Your Cognitive Bandwidth

Why Your AI Assistant Might Be Making You More Overwhelmed

Hi and welcome to today’s issue!

If you’ve been following along, you’ll know that at Work3 we try to dissect trends and look at the big picture, but also to take different perspectives and run thought experiments. Today, I’d like to talk about the hidden ‘con’ in the whole AI productivity craze: Cognitive Load.

Let’s go!

WTH is Cognitive Overload?

Feeling swamped? Buried in emails? Reports? Too much information? All of it needs your attention. Right now.

AI is meant to be a super helper. It promises to make things easy. Do the boring stuff. Let you think about what really matters.

Is that true, though? Does it always feel easier? Or is it just… different work?

First, we need to understand what Cognitive Load is.

Think of it as the demand placed on your brain's working memory, its active RAM. It's the mental effort required to learn something new, solve a problem, or simply process the information hitting you right now. Like computer RAM, working memory is limited. When it's overloaded, our focus frays, decision-making suffers, mistakes creep in, and burnout looms. The way tasks are structured, information is presented, and tools are designed all drastically affect this load.

Few people talk about it, and even though it’s hard to measure, it can be a real problem:

Performance Decline: High cognitive workload reduces task efficiency. For example, multitasking while typing led to a 23% decline in performance.

Physiological Effects: Increased cognitive demands result in elevated heart rate and skin conductance. Under high workload, the relationship between these physiological responses and cognitive performance is nonlinear.

Fatigue Correlation: Mental workload scores correlate significantly with occupational fatigue (particularly the frustration and mental demand dimensions).

As with any system (including AI), we should aim for balance. But who is optimizing for this, or even measuring it? Right now, no one.

Workers are increasingly burdened by managing multiple devices and apps. About 42% of employees who use more than 10 apps take 15 minutes or longer to refocus after switching tasks.

Notifications and context-switching across devices contribute to rising mental strain. One-quarter of surveyed professionals receive more than 30 notifications per day, adding to their workload.

Nearly 45% of tech workers reported spending four hours or fewer per day on focused work.

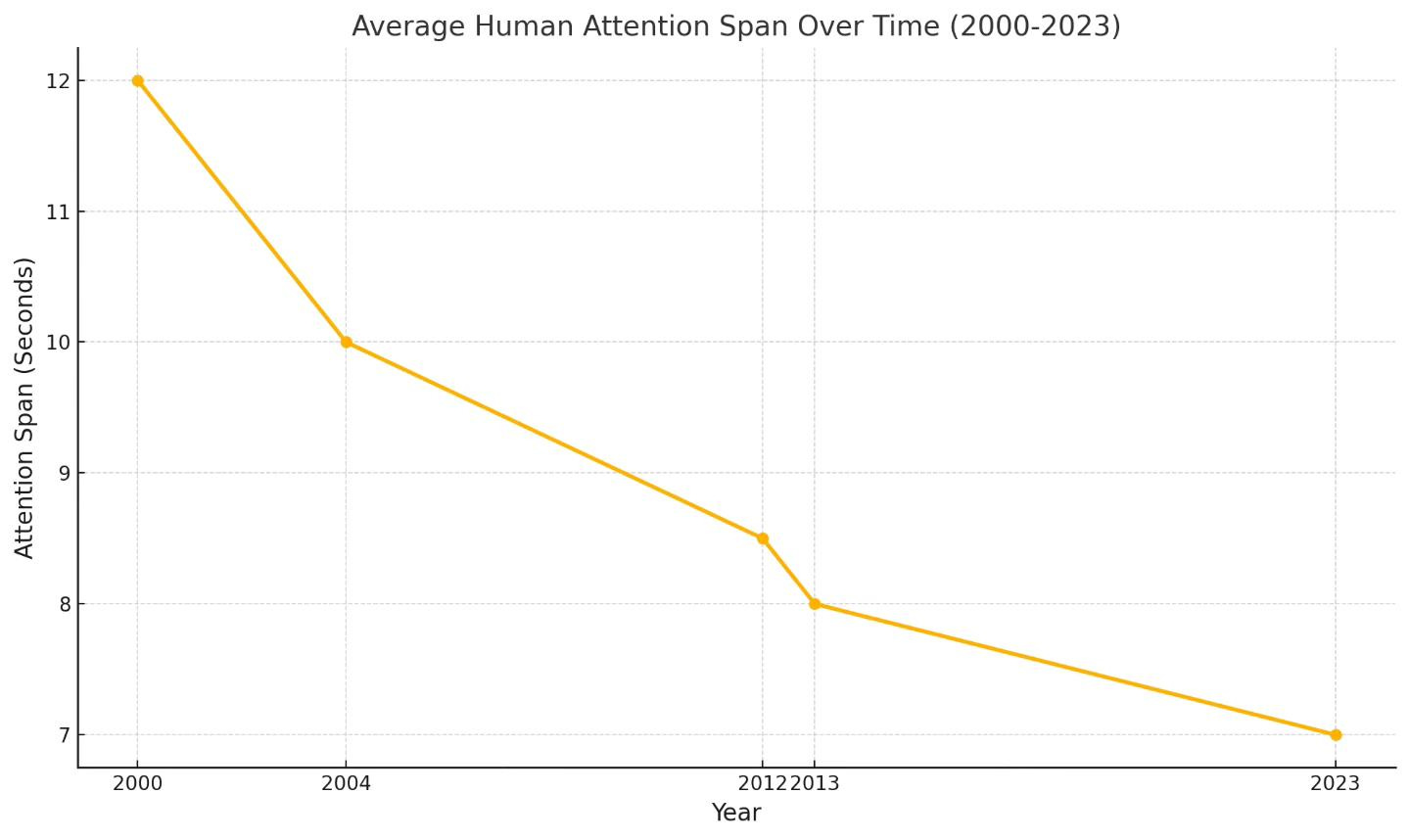

Of course, cognitive load goes hand in hand with attention span. When your brain is overloaded, focus goes out the window. And since our ability to focus has already been going down the drain with the rise of notifications, social media, and digital dopamine addiction, we’re in for a toxic cocktail.

Why AI will make things worse

It’s true that for many things, AI can help reduce the burden of manual tasks, from data entry all the way up to market research and beyond.

However, that mostly reduces the time spent on a task, which is not the same as reducing cognitive workload.

If the end goal of the technology, especially for companies but likely for individuals too, is simply to get more done, faster and perhaps cheaper, then we risk overlooking a critical hidden cost: the increase in our own cognitive load.

Here’s a look at the specific ways AI can actually make our heads hurt more, not less:

The Continuous Oversight Demand: As current AI often struggles with full task autonomy and handling novel situations reliably, users are required not just to check final outputs, but to provide ongoing monitoring and intervention. This effectively casts the user in a continuous supervisory or "babysitting" role, adding a persistent layer of cognitive management.

The Prompting Puzzle: Getting the right output from AI often requires careful, iterative prompt engineering. Crafting, testing, and refining prompts is a cognitive task in itself, sometimes feeling more laborious than the original task.

The Verification Burden: We can't blindly trust AI. Hallucinations, biases, and inaccuracies mean we must critically evaluate its output. This constant vigilance and fact-checking adds a significant layer of mental effort and responsibility.

The Failure Diagnosis Overhead: When AI encounters genuinely novel aspects of a task where its pattern-matching fails, the user bears the cognitive load of not just recognizing the failure, but diagnosing why it occurred (e.g., poor prompt, task too novel) and then devising a workaround or completing the task manually. This adds a complex problem-solving layer beyond simple verification.

The Mental Model Overhead: To use AI effectively, we often need a basic understanding of how it works, its limitations, and its potential pitfalls. Building and maintaining this mental model requires ongoing cognitive effort.

Information Overload 2.0: AI can generate vast amounts of text, code, or ideas incredibly quickly. Instead of simplifying, this can sometimes lead to more information to sift through and evaluate, increasing decision fatigue.

The Redundancy Burden: AI can present information inefficiently, creating unnecessary cognitive work. This includes generating summaries that users feel compelled to verify against the original source due to lack of trust, or presenting the same core data in multiple formats (text, lists, charts), forcing redundant mental processing.

Tool Sprawl & Learning Curves: The AI landscape is exploding. Learning to use multiple AI tools, understanding their quirks, and integrating them into workflows demands time and cognitive energy.

Last but not least, as predicted in some past articles on Work3, three more factors will come into play: the rise of side hustles, an increase in ‘overemployment’ (employees holding several jobs at the same time), and the broadening of skills and of the multiple ‘roles’ each of us will be juggling at once.

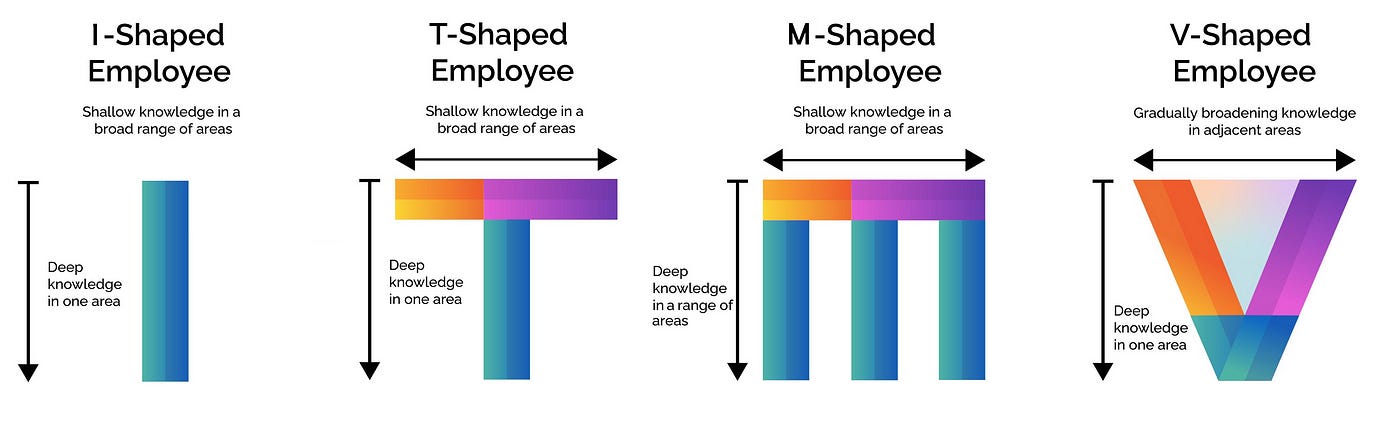

AI will help drive the transition from T-shaped employees to M-shaped employees: people who have deep expertise in multiple core areas, along with the broad collaborative ability needed to connect and work across these and other domains.

This will significantly increase cognitive workload:

Intense Learning & Upkeep Demand: Mastering multiple complex domains requires a huge initial learning investment and continuous effort to stay current. Even with AI accelerators, absorbing, integrating, and maintaining deep knowledge across several distinct fields is inherently more cognitively demanding than focusing on one. The sheer volume of information to manage multiplies.

Heavy Context-Switching Costs: Constantly shifting your mental gears between the different frameworks, terminologies, and problem-solving approaches of multiple deep domains is mentally taxing. This frequent context switching is known to drain cognitive resources, reduce focus, and increase the likelihood of errors – directly adding to cognitive load.

The High Burden of Integration: The real power of the M-shape lies in connecting insights across disciplines. However, this act of synthesis – identifying links, resolving conflicts between domain knowledge, and applying concepts creatively – is itself a highly complex and demanding cognitive task requiring significant working memory and mental effort.

How do we get out of this vicious cycle?

By starting to measure. As with all complex problems, there won’t be a one-size-fits-all solution, and it will take many iterations. Here are a few ideas we can start with:

Just Ask People (Subjective Ratings):

Simple Check-ins: Regularly ask employees simple questions like, "On a scale of 1-5, how mentally demanding was that task/your day?" or "How much mental effort did using this new tool require?"

Structured Surveys: Use simplified versions of established questionnaires (like the NASA-TLX) that ask people to rate factors such as mental demand, effort, frustration, and time pressure for specific tasks or roles. These capture perceived strain directly.
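To make the structured-survey idea a bit more concrete, here's a minimal Python sketch of "raw TLX" scoring, a common simplification of the NASA-TLX that skips the pairwise weighting step and simply averages the six subscale ratings. It assumes each rating is already on a 0-100 scale (on the performance subscale, higher means worse perceived performance, so all six point the same way); the function and field names are my own, not part of any official tool.

```python
from statistics import mean

# The six NASA-TLX subscales; each rating is assumed to be on a 0-100 scale.
TLX_SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: dict[str, float]) -> float:
    """Return the unweighted ("raw") TLX score: the mean of the six subscale ratings."""
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscale ratings: {missing}")
    for name in TLX_SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name} must be between 0 and 100")
    return mean(ratings[s] for s in TLX_SUBSCALES)


# One person's ratings after a task (illustrative input only).
example = {
    "mental_demand": 70,
    "physical_demand": 10,
    "temporal_demand": 60,
    "performance": 40,
    "effort": 65,
    "frustration": 55,
}
score = raw_tlx(example)  # average of the six ratings: 50
```

A single score says little on its own; averaging per task or per tool over a few weeks is where the trend becomes useful.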

Watch Performance Metrics (Objective Output):

Look for Changes: Are error rates going up on detailed tasks? Is it taking longer to complete standard processes? Is the quality of work dipping during busy periods or after introducing complex tools? Tracking these KPIs, especially looking for patterns or changes correlated with demanding work, can indicate that high cognitive load is taking a toll.

Task Switching Data: As mentioned earlier, tracking how often people switch between apps or tasks can be a proxy. Frequent, rapid switching often correlates with higher strain and difficulty focusing.
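Here's one way the task-switching proxy could be computed, sketched in Python under some loud assumptions: that you have a time-ordered log of which application was in focus (the `Event` format here is hypothetical, not the output of any particular tracking tool) and that it's collected with people's consent. The sketch simply counts changes of application and divides by the number of hours the log covers.

```python
from datetime import datetime

# Hypothetical activity-log entry: (timestamp, application in focus), sorted by time.
Event = tuple[datetime, str]


def switches_per_hour(events: list[Event]) -> float:
    """Count app-to-app focus changes and normalize by the observed time span in hours."""
    if len(events) < 2:
        return 0.0
    # A switch is any consecutive pair of events where the focused app changes.
    switches = sum(1 for (_, prev), (_, cur) in zip(events, events[1:]) if cur != prev)
    span_hours = (events[-1][0] - events[0][0]).total_seconds() / 3600
    return switches / span_hours if span_hours > 0 else 0.0


log = [
    (datetime(2024, 5, 6, 9, 0), "email"),
    (datetime(2024, 5, 6, 9, 10), "chat"),
    (datetime(2024, 5, 6, 9, 15), "email"),
    (datetime(2024, 5, 6, 9, 30), "docs"),
    (datetime(2024, 5, 6, 9, 45), "docs"),
    (datetime(2024, 5, 6, 10, 0), "chat"),
]
print(switches_per_hour(log))  # 4 switches over one hour -> 4.0
```

A rising rate for the same role or team over time is probably more telling than the absolute number, which will vary a lot by job.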

Observe Work Patterns (Behavioral Clues):

Managers and teams can look out for behavioral signs. Are people needing more breaks? Do they seem to struggle to concentrate? Are complex tasks being put off? Are mistakes becoming more common? These can be visible indicators that the mental load is becoming excessive.

Analyze Tool Interaction (Digital Footprints):

Where ethical and possible, analyzing how people interact with digital tools can offer clues. How long does it take to complete a workflow involving a new AI? How often are outputs corrected? How much time is spent navigating complex interfaces? This data can highlight specific points of friction contributing to load.
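As a rough illustration of the "how often are outputs corrected?" question, the sketch below assumes a hypothetical log record for each AI-assisted workflow run (workflow name, duration, and whether the user had to edit the output) and summarizes the correction rate and median duration per workflow. The schema is invented for this example; real tools will log different things, if they log anything at all.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class AIInteraction:
    """One AI-assisted workflow run (hypothetical logging schema)."""
    workflow: str
    duration_minutes: float
    output_edited: bool  # True if the user had to correct the AI output


def friction_summary(interactions: list[AIInteraction]) -> dict[str, dict[str, float]]:
    """Per workflow: share of runs whose output was corrected, and median duration."""
    by_workflow: dict[str, list[AIInteraction]] = {}
    for item in interactions:
        by_workflow.setdefault(item.workflow, []).append(item)
    summary = {}
    for name, runs in by_workflow.items():
        summary[name] = {
            "correction_rate": sum(r.output_edited for r in runs) / len(runs),
            "median_minutes": median(r.duration_minutes for r in runs),
        }
    return summary


runs = [
    AIInteraction("report_draft", 12.0, True),
    AIInteraction("report_draft", 8.0, False),
    AIInteraction("report_draft", 15.0, True),
    AIInteraction("report_draft", 10.0, True),
    AIInteraction("email_triage", 3.0, False),
    AIInteraction("email_triage", 4.0, False),
]
# report_draft: 3 of 4 outputs corrected, median 11 minutes
summary = friction_summary(runs)
```

Workflows that combine a high correction rate with long durations are likely candidates for a closer look at where the friction comes from.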

Advanced Methods (The High-Tech Options):

For deeper insights (though less common in typical workplaces), there are physiological measures. Think wearables tracking heart rate variability (HRV), eye-tracking systems monitoring pupil dilation or gaze patterns, or even EEG measuring brain activity. These offer objective data but usually require specialized equipment and expertise.
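For the curious, here's what one of those physiological signals looks like in practice. RMSSD (root mean square of successive differences) is a widely used time-domain HRV measure computed from the intervals between consecutive heartbeats (RR intervals). Lower values are often associated with higher stress or mental effort, though interpreting them properly needs personal baselines and clean data. This minimal sketch assumes you already have artifact-free RR intervals in milliseconds, for example exported from a wearable.

```python
from math import sqrt


def rmssd(rr_intervals_ms: list[float]) -> float:
    """Root mean square of successive differences between RR intervals (in ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each pair of consecutive heartbeat intervals.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return sqrt(sum(d * d for d in diffs) / len(diffs))


print(round(rmssd([800, 810, 790, 800]), 2))  # diffs 10, -20, 10 -> sqrt(200) ≈ 14.14
```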

The Bottom Line: No single measure tells the whole story. Often, the best understanding comes from combining a few methods – like linking subjective feelings of overwhelm with objective performance data – and always considering the specific context of the job and the individual. The goal is to spot trends and identify where workflows or tools might be pushing cognitive limits too far.

As I suggested in my article on Productivity vs Performance, all of this may require us to rethink the whole model and architecture of work (think compensation based on output, and more).

Unless we start addressing the problem, we will end up with a powerful technology alongside an incredible difficulty in maintaining focus, doing deep work, and ensuring that the technology truly augments human capabilities rather than overwhelming them.

Ciao,

Matteo
