There's no (A)I in Team

Forget Superheroes. The Future Belongs to Teams with a High Team Quotient

Will the race for AI dominance be won by the company with the most superheroes, or the one with the best teams?

The answer reveals an uncomfortable truth at the heart of our obsession with ‘performance-culture.’ We are fascinated by ‘superheroes’—the 10x engineer, the founder who 'wills their vision into existence,' or the athlete who 'carries the team.' This hero-worship conveniently allows us to overlook the crucial, but less glamorous, reality that no one achieves greatness in a vacuum.

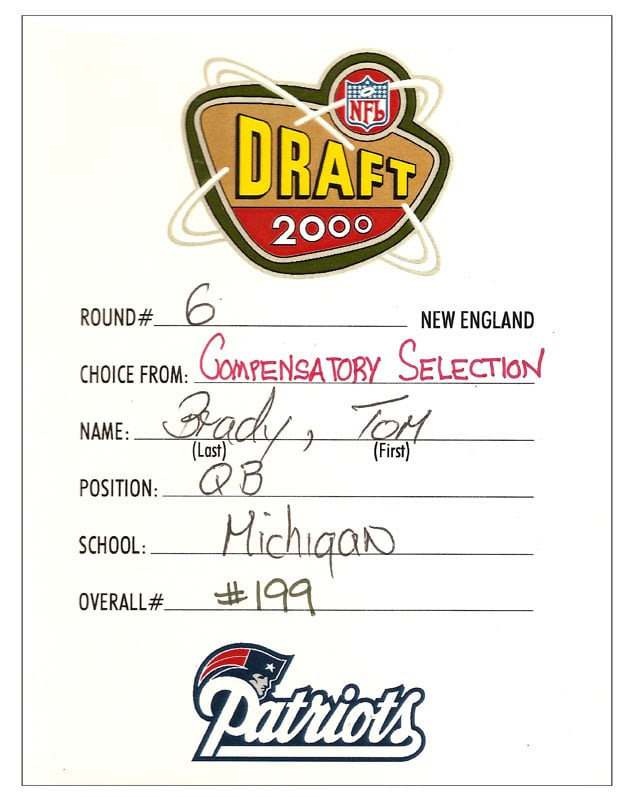

This isn't a new problem. It’s perfectly captured by an anecdote about one of history's greatest quarterbacks:

“Brady… was drafted 199th overall, behind six quarterbacks who were supposed to be the future of the league. Teams had all the data in the world, but they still got it wrong.” “This is the great paradox of talent evaluation. Talent is mispriced because NFL teams focus on isolated individual performance rather than how players fit together as a team.”

This is the very paradox we've explored in pieces like The Death of the CV, and it highlights a timeless business tension: the perceived value of a superstar versus the actual value of a synchronized team. The most vivid expression of this is the infamous corporate dilemma of ‘firing high-performing assholes’—the necessary decision to remove a statistical superstar whose presence is a net negative for the team’s coordination and psychological safety.

This principle is powerfully articulated by Sangeet Choudary in his book ‘Reshuffle’. He argues that we often miss the point of new technologies by viewing them as individual tools. The real transformation, especially with AI, comes from its ability to enhance the entire ecosystem through its true superpower: coordination. As Choudary points out, an ecosystem creates more value when it moves in the most synchronized way.

This long-standing tension between the celebrated individual and the coordinated team is now being stress-tested and amplified by the single most important technological shift of our era: the adoption of AI.

The New Digital Divide is Inside Your Team

The first wave of generative AI adoption has been a bottom-up, chaotic, and profoundly individualistic revolution. It’s a “bring your own AI” culture (according to a Microsoft study, 78% of AI users are bringing their own AI tools to work). On any given team today, you have an "AI power user" who is often operating on a different plane of existence. As we've discussed in The AI Mirror, how individuals use these tools reveals much about their mindset, creating a new kind of digital divide. Power users are the ones using AI to create new workflows and come up with new ideas for projects. Meanwhile, their colleagues might still be tentatively using it to rephrase an email or do some superficial research.

This has come about for two main reasons:

Top-down mandates are disconnected from reality. Vague executive orders like ‘Everyone must use AI’ or ‘Cut the team in half with AI’ are destined to fail. They focus on the what without providing the how, creating a vacuum of strategy that leaves employees to fend for themselves. This disconnect between executive expectations and on-the-ground reality is a primary driver of chaotic, individual adoption.

Consumer-first tools empower the individual. Unlike traditional enterprise software, generative AI tools like ChatGPT are available directly to anyone. This empowers curious individuals to become "power users" on their own, long before any official corporate strategy is in place.

That said, the challenge isn't getting everyone to adopt AI at the same speed. The goal is to establish a shared system that elevates the entire team's capability. The individual artisans must come together to become system builders (and this is where innovation usually comes from).

Here are some ideas for how that can come about:

Hiring for Team Quotient

To build a coordinated team, you have to hire for it, a topic we've explored when asking about Your Most Important Hire for 2026. This means moving beyond interviews that test for individual brilliance and towards simulations that reveal collaborative instincts.

One of the best (and to date, unique) hiring experiences I’ve had was with a startup that gave me a business case and then temporary access to their team's Slack. The goal wasn't just to see if I could solve the problem, but to observe how I would leverage the team to do it. It was a powerful, real-world test of my collaborative process.

While giving every candidate full Slack access isn't always scalable, the principle behind it—evaluating candidates in a real-world, collaborative context—is something any team can adopt. Here are a few ways to do it:

Paired Problem-Solving: Instead of a solo take-home test, pair a candidate with a current team member for a live, 90-minute session. The goal is to observe the dynamic: Do they build on ideas? How do they navigate disagreement?

The "Playbook Contribution" Test: Give the candidate access to a simplified version of your team's "AI Playbook." Their task is to use it to solve a problem and then suggest one improvement to the playbook itself. This tests their ability to not just use a system, but to improve it for everyone.

The "Reverse Interview" on Process: Dedicate an interview slot for the candidate to grill your team on its workflows. A candidate with a high TQ will ask sharp questions about how the team communicates, shares knowledge, and resolves conflict, revealing how deeply they think about the collective ecosystem.

From Training to Workflow Reviews

Another practical change is to shift from abstract, one-size-fits-all training to concrete, collaborative "workflow reviews." Traditional AI training often fails because it's disconnected from daily work; people learn about a tool's features but struggle to apply them. A workflow review flips this model.

Once a month, the team gets together to deconstruct a core process on a whiteboard—say, building the weekly marketing performance report. They map out every step: pulling data from Analytics, cleaning it, finding insights, writing commentary, and creating slides. Then, they collectively pinpoint the friction points: "The data summary takes two hours." "Writing the first draft of the commentary is always a slog."

From there, they don't just talk about AI; they prototype solutions. The team co-writes and tests a few specific prompts to automate those friction points. The successful prompts are then codified into the team's official playbook. This method transforms AI adoption from a lonely, academic exercise into a practical, iterative team sport, focused on solving real problems together.

Creating Space for Innovation: The 20% Rule for AI

Workflow reviews are fantastic for optimizing the present, but true breakthroughs require unstructured time to explore the future. This is where companies can take a cue from one of the most famous incentives in tech history: Google's "20% Time."

By officially sanctioning time for employees to work on projects outside of their core responsibilities, Google created the space for innovations like Gmail and AdSense to emerge. We need a similar model for the AI era. Leaders should formally allocate 10-20% of their team's time for "AI Exploration." This is time dedicated to tinkering: building a custom GPT, experimenting with a new image generation model, or automating a process that isn't on any official roadmap.

This isn't a perk; it's a strategic investment. It gives employees the permission and autonomy to move from being passive users of AI to active builders of the team's future capabilities. It’s the incentive that fuels the engine of bottom-up innovation.

Measuring What Matters: Team Quotient (TQ)

Hiring for collaboration, redesigning workflows, and creating space for innovation are all critical actions. But they will ultimately fail if the underlying system of evaluation still rewards only individual heroism. This individual focus is deeply embedded in our tools. Almost all work technology from the last century—from performance review software to applicant tracking systems for filling vacancies—is designed to measure the individual. This presents a massive opportunity for a new generation of tool providers to rethink their products around team coordination and collective performance.

"Team-Native" AI Tools

The first wave of generative AI gave us powerful personal assistants. The next wave must deliver "team-native" AI—tools designed not for the individual user, but for the collective workflow.

These tools treat the team, not the individual, as the primary user. They become a form of shared cognitive infrastructure, enhancing the team's collective memory, creativity, and decision-making capabilities. This is the tangible future of collaborative work.

To make this shift stick, though, we must change how we measure and define success. We need a new metric, which we can call "Team Quotient" (TQ).

While the term may be new, the principle is already a core part of how the world's most innovative companies operate. Google, for instance, spent years on its famous Project Aristotle study to discover what made its most effective teams tick. The answer wasn't the brilliance of individual members, but "psychological safety"—a shared belief that the team is safe for interpersonal risk-taking.

Similarly, Netflix's "Keeper Test" forces managers to ask a brutal question: "If this person were leaving, would I fight to keep them?" A key part of the calculus is the person's contribution to the team. A brilliant jerk who drains energy and creates friction fails the test, proving that Netflix values those who "make their teammates better."

For today’s purposes, we can define Team Quotient (TQ) as a team's demonstrated ability to use AI to amplify its collective intelligence and output. We can place a team’s TQ on a maturity scale:

Level 1 (Ad-Hoc): Individual, siloed use. The team’s capability is the sum of its isolated parts.

What it looks like: A "bring your own AI" culture. There are no shared prompts, and usage is inconsistent across the team.

How to measure: Conduct simple employee surveys ("Do you use AI at work? Which tools?"). Observe inconsistencies in the speed and quality of similar tasks.

How to implement change: Create a dedicated Slack or Teams channel called #ai-wins for people to start sharing successful prompts and discoveries.

Level 2 (Coordinating): Team members share prompts and successes informally. Pockets of excellence emerge.

What it looks like: The #ai-wins channel is active. A few "power users" are informally helping others.

How to measure: Track the number of shared prompts. Identify the informal "AI champions" who are driving adoption.

How to implement change: The team lead should formalize the top 5-10 shared prompts into a simple, shared document (e.g., a Notion page). This is the V1 of your "AI Playbook."

Level 3 (Integrating): AI is formally embedded into specific, shared workflows documented in a playbook.

What it looks like: The AI Playbook is a go-to resource for specific tasks. New hires are pointed to it during onboarding. The team conducts regular workflow reviews.

How to measure: Track adoption rates of playbook prompts. Measure time saved on specific, integrated tasks (e.g., "Time to create weekly report" is down 40%).

How to implement change: Formally schedule monthly workflow reviews. Introduce "20% time" for AI exploration to find new integration opportunities.

Level 4 (Collaborating): The team actively uses AI for complex, collaborative tasks like brainstorming or strategic planning.

What it looks like: AI is present in team meetings. People use it on a shared screen to brainstorm, prototype ideas, or analyze complex data together.

How to measure: Qualitatively assess the team's innovation velocity. Count the number of new ideas or project prototypes generated via collaborative AI sessions.

How to implement change: Invest in collaborative AI tools or enterprise accounts. Set team goals that explicitly require using AI for strategic analysis.

Level 5 (Co-Creating): The team treats AI as a strategic partner, developing entirely new capabilities, services, or products that would be impossible without it.

What it looks like: The team is building proprietary AI tools, custom agents, or unique workflows that create a durable competitive advantage.

How to measure: Track revenue from new, AI-enabled services. Measure the creation of new intellectual property (e.g., custom GPTs, internal tools).

How to implement change: At this stage, the process is self-sustaining. The focus is on continued investment and protecting the team's innovative edge.

This model fundamentally shifts the focus from asking "who is our best AI user?" to the far more critical question: "how good is our team at using AI together?" The answer will likely determine which teams thrive and which become obsolete.

This maturity scale isn't just a theoretical model; it can be a practical tool for reframing performance conversations. Instead of solely focusing on individual KPIs, team performance reviews could include a quarterly self-assessment: "Where are we on the TQ scale, and what's our plan to get to the next level?" This shifts the focus of peer feedback from "was Jane helpful?" to "how did Jane's contributions to the AI Playbook elevate the entire team's output?" For team leads, their ability to advance their team's TQ level becomes a core measure of their leadership effectiveness. Ultimately, integrating TQ into performance reviews makes the invisible work of coordination visible, measurable, and—most importantly—valuable.
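For teams that want to keep the quarterly self-assessment somewhere more durable than a slide, the scale can be written down as a small script. What follows is only a rough sketch under stated assumptions: the four yes/no signals, the `TeamSnapshot` and `assess` names, and the rule that a team sits at the highest level whose signals are all present are illustrative choices made here, not an established scoring method. The `time_saved_pct` helper just computes the kind of "time to create weekly report" figure mentioned under Level 3.

```python
from dataclasses import dataclass
from enum import IntEnum


class TQLevel(IntEnum):
    """The five maturity levels described in the scale above."""
    AD_HOC = 1
    COORDINATING = 2
    INTEGRATING = 3
    COLLABORATING = 4
    CO_CREATING = 5


# Suggested next step per level, paraphrased from the scale above.
NEXT_STEP = {
    TQLevel.AD_HOC: "Open a shared #ai-wins channel for prompts and discoveries.",
    TQLevel.COORDINATING: "Formalize the top shared prompts into a V1 AI Playbook.",
    TQLevel.INTEGRATING: "Schedule monthly workflow reviews and AI exploration time.",
    TQLevel.COLLABORATING: "Invest in collaborative AI tools and set goals that use them.",
    TQLevel.CO_CREATING: "Keep investing and protect the team's innovative edge.",
}


@dataclass
class TeamSnapshot:
    """Hypothetical yes/no signals for a quarterly self-assessment (an assumption)."""
    shares_prompts: bool
    has_playbook: bool
    uses_ai_in_group_sessions: bool
    ships_ai_enabled_products: bool


def assess(snapshot: TeamSnapshot) -> TQLevel:
    """Return the highest level reached without skipping an earlier signal."""
    signals = [
        (snapshot.shares_prompts, TQLevel.COORDINATING),
        (snapshot.has_playbook, TQLevel.INTEGRATING),
        (snapshot.uses_ai_in_group_sessions, TQLevel.COLLABORATING),
        (snapshot.ships_ai_enabled_products, TQLevel.CO_CREATING),
    ]
    level = TQLevel.AD_HOC
    for present, reached in signals:
        if not present:
            break  # a missing signal caps the team at the previous level
        level = reached
    return level


def time_saved_pct(before_minutes: float, after_minutes: float) -> float:
    """Percentage reduction in time spent on a task after a workflow change."""
    return round(100 * (before_minutes - after_minutes) / before_minutes, 1)


if __name__ == "__main__":
    team = TeamSnapshot(True, True, False, False)
    level = assess(team)
    print(f"Level {int(level)} ({level.name}): {NEXT_STEP[level]}")
    print(f"Weekly report time saved: {time_saved_pct(120, 72)}%")
```

The signals are deliberately coarse. The point is to give the team a shared starting position for the conversation about the next level, not to produce a precise score.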

Final Thoughts: The Orchestrator's Dilemma

This issue surfaced in a recent webinar we hosted, titled "Should IT and HR merge?". While we talked about examples of big organizations joining those teams together, the conversation quickly evolved past organizational charts to the real heart of the matter: coordination at scale. The most pressing challenge isn't just getting individuals to sync up; it's getting teams and then entire departments to work together. This is a natural extension of the ideas we explored in The Decentralised Workforce. How does the AI-driven marketing team's playbook connect with the sales team's process? How do the insights from HR's talent analytics feed into engineering's project management?

This is where the leadership challenge of this decade comes into focus: evolving from a manager of people to an orchestrator of intelligence.

This new role is fundamentally about mastering coordination at every level—not as a tool for enforcing conformity, but as a way to create a resilient, learning organization. It's about knowing when to unleash the "superhero" on a solo mission and when to integrate their discoveries back into the team's shared playbook. It's about managing a portfolio of talent, protecting the outlier while simultaneously raising the baseline for everyone else.

Success won’t go to whoever has the most AI power users, just as the NFL draft wasn't won by the teams who picked the quarterbacks with the best individual stats. The ultimate competitive advantage will go to the organizations that build seamlessly coordinated processes where human creativity, strategic judgment, and empathy are fused with the speed and scale of AI. They understand that AI’s real power isn’t creating a single superhero; it’s giving the entire organization a cape.

P.S. If you're interested in the next evolution of AI coordination, we're hosting a webinar on September 10th about the rise of AI Agents and how they will transform teamwork. We'd love to see you there. To get the invite, make sure you join our Work3 Community!

Ciao,

Matteo

This is a fantastic paradigm to think about teams in the Intelligence Age. I could totally see a Level 6: Inception (Human teams developing new capabilities through teams of AI agents).

Great piece. Implementation requires a very collaborative client, though!