Learning AI Feels Like a Second Job

Deep dive into why, and how to learn it without getting overwhelmed

Have you reflected on how AI is changing your life recently?

Until now, I hadn’t. I just kept chasing the news and trying to keep afloat — but I realised I had started to feel very mixed emotions.

My story in short: I’m a natural enthusiast. After a short (but passionate) time peeking inside the world of Blockchain and Web3, and almost a decade of machine learning projects at work, in 2022 I went all in. Evenings and weekends went into researching, teaching, testing, building, and getting my hands on everything I possibly could.

Even then, I didn’t realise what I was really signing up for.

I went through every emotional phase imaginable: it’s going to change the world!, it’s going to kill us all!, it won’t change anything! — sometimes all within the same conversation.

Three years later, the initial enthusiasm has started to wane, and I’ve started to feel the fatigue.

This seems in line with Gartner’s AI Hype Cycle, which has started to position GenAI in the ‘Trough of Disillusionment’: a place every technological innovation has to pass through before bringing clarity (enlightenment) and productivity gains.

Going deeper, I thought of three main reasons for this:

The tech is great, but it’s not there yet. Yes, it improves at incredible rates, but often these things are not what you need in that moment. It takes time to give context, to prompt, to debug, to get what you want. At work, success depends on business alignment, proactive infrastructure benchmarking, and coordination between AI and business teams.

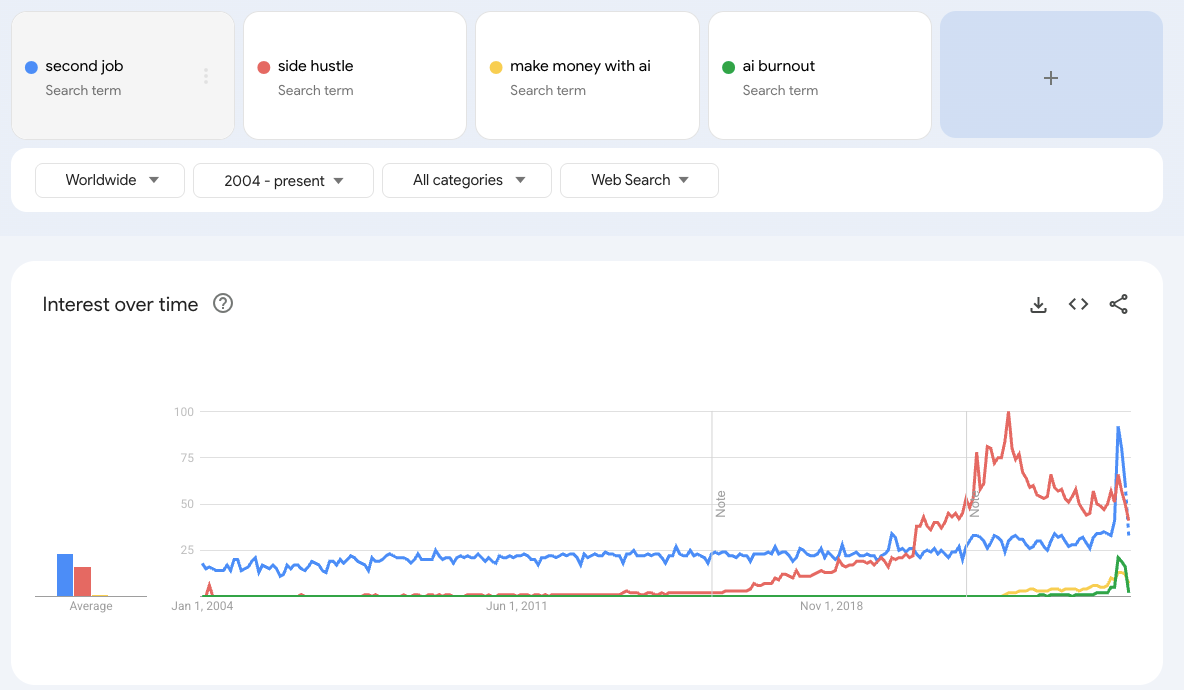

FOMO is a much bigger problem than I thought. I was too young (or careless) for the dot-com boom, but I doubt anyone felt what we feel now: the mix of capitalism, social media, and infinite content is explosive. I’ve had moments of feeling almost physically anxious opening my RSS feed, seeing another flood of new tools and news about multi-million funding for anything that moved. I’ve become a kind of digital hoarder — tabs, trials, newsletters stacked like unbuilt furniture in my task manager. Each one a small promise I don’t have time (or will) to unpack.

It changes the skills game, but is that all positive? I’ve always been frustrated about not knowing how to code. I took evening classes in Python at 35, just to run basic machine learning on my startup’s data. I wanted to make videos. Now, suddenly, I can. And yet, I often feel like a kid in a candy store: everything within reach, yet strangely unsatisfying. There’s a point where abundance becomes anxiety; where every new thing I could learn feels like another reminder of what I haven’t.

All of it boils down to this realisation: AI feels like a second job.

It seems like I’m not alone: according to data from LinkedIn, 51% of professionals say that learning AI feels like a second job, and 41% say the pace of change is affecting their well-being. And if I had to guess, an even higher share of students and graduates would fall into that category (we wrote about this in AI Is Killing Graduate Jobs).

What’s strange is that it doesn’t just feel like extra work — in many ways, it simply is.

The hidden costs of learning AI

What feels personal at first — exhaustion, distraction, guilt — might actually be structural. The friction is built into how we’re learning. Here are a few reasons why:

Drinking from a Firehose: I signed up to at least half a dozen newsletters, and even though they mostly cover the same topics, I still try to read them. Then there’s my LinkedIn feed, full of points of view and charts (a trap I fall into myself, I admit), and Instagram creators saying how their workflow changed the world or made them rich easily. It’s hard to drink from this firehose, and hard to think anything through before the next thing comes in.

Context switching: the first and simplest reason AI feels like a second job isn’t really time — it’s attention. We’re drawn, almost hypnotically, by the promise of talking to a machine in our own language. But every time we “converse” with it, we shift mental gears — from intent to prompt, from human problem to machine syntax — and each shift drains a little focus.

We wrote about this in Cognitive Debt: every time we change environments, interfaces, or mental models, we lose energy. AI amplifies that effect, asking us to juggle multiple layers of thinking at once — framing, translating, interpreting, reframing. Even when AI saves us time, it quietly taxes our attention. We’re not just learning new tools; we’re learning how to think with them.

Emotional debt: each new tool brings its own kind of guilt — the guilt of not exploring enough, of falling behind, of not knowing what others seem fluent in. Curiosity turns into compulsion.

The story says AI will make us more “strategic,” taking away repetitive tasks so we can focus on higher-value work. But is that really happening? Not yet, and the mental load hasn’t gone away.

A Danish study of 25,000 workers found widespread chatbot use but almost no change in hours or wages. AI saved 2.8% of work time but created new tasks for 8.4% of workers, which ate into the gains. What that means in practice is simple: we’re filling saved hours with invisible work. We’re not resting; we’re reformatting decks, re-prompting outputs, chasing the next fix of optimisation.

The 100-careers Life: Andy often talks about this — where a career isn’t one long skill arc, but a constant loop of learning, applying, obsoleting, and relearning. AI accelerates that loop. One week you’re a prompt engineer, the next a data wrangler, the next a video producer. Every update spawns another micro-role to play.

The Over-Employed era: meanwhile, some are quietly juggling multiple jobs, multiple selves, powered by the same tools meant to simplify life (check out r/Overemployed with 300k visitors/week). The new worker isn’t one person doing one job well, but one person doing many jobs just enough.

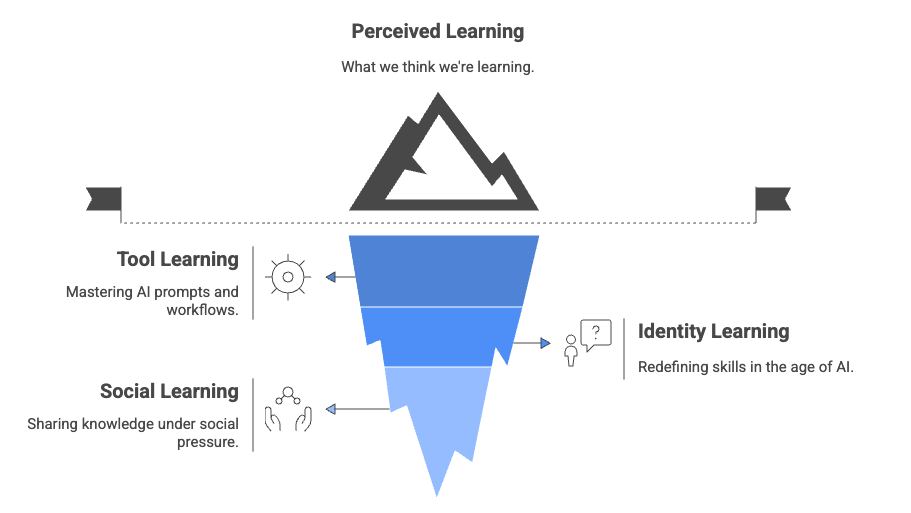

The more I talk about this with people, the clearer it becomes that “learning AI” isn’t really one thing at all, but three layers happening at once. On the surface there’s tool learning — prompts, workflows, automation chains — the part the tutorials and how-to threads are about. It looks like the hard part, but it’s rarely where the real exhaustion comes from. Beneath that is identity learning: the quiet negotiation with yourself about who you are when the skills that once defined you become fluid. It’s the unsettling question of what remains “yours” when the boundary between thinking and tooling blurs. And beneath even that sits social learning: the fact that we are not learning in private but in public, in feeds and meetings and performance reviews, constantly performing adaptability to prove we’re keeping up. The exhaustion isn’t from the prompts or the tools — it comes from maintaining a sense of self under visibility and change. In that sense, most of the work of learning AI isn’t cognitive at all — it’s identity work.

As we explored in Agent Skills – The New Currency of Work, this constant reinvention comes at a cost. AI hasn’t given us more time; it’s given us more selves to maintain.

And when identity fragments, burnout isn’t a glitch.

Debunking 4 AI Traps to frame the problem

I mentioned having a solution, and we will get to it in a second. One spoiler first: it’s not technological. It’s 100% about human culture and communication.

That’s why I’d like to start by debunking 4 traps that make using and learning AI so painful.

1. Speed trap: there’s another layer to this — AI doesn’t just change what we do; it changes how fast we think we should do it.

The first time I saw ChatGPT write a paragraph in three seconds, I felt equal parts awe and anxiety. There it was, an instant creation, and yet a small voice whispered: “Why does it take you so long?”

Generative AI is teaching us to expect speed from ourselves. In the same way social media trained us to consume faster, AI is training us to produce faster. When a tool can generate five drafts in seconds, every human pause feels inefficient.

2. Strategy trap: We adopt AI to “save time,” but often end up spending that saved time questioning why we’re not faster. The work AI creates (reviewing, fixing tone, re-contextualising) can consume as much time as it frees. And that’s the paradox: the more we try to use it well, the more it pulls us deeper into itself.

This isn’t just technological. It’s cultural. We’ve been conditioned to believe that speed is virtue — that if something can be done faster, it should be. Generative AI turns that reflex inward: we’re no longer competing with others; we’re competing with our own tools. And that is dangerous, because creativity, empathy, and meaning still take time.

The strategic myth says that AI will automate the “busywork,” leaving us to do only the creative or strategic tasks. But strategy doesn’t come from constant acceleration, it comes from context, boredom, repetition, wandering attention. Our best ideas appear in the gaps: the shower, the walk, the quiet in-between. AI, by design, never pauses. But we have to.

The pauses aren’t inefficiencies, they’re our core infrastructure. They’re where intuition settles and meaning forms. If we design a future where we eliminate all slow or “low-value” work, we may end up eliminating the conditions that make strategic work possible at all.

3. Learning in public: I recently came across a viral post on Reddit’s r/WomenInTech titled “I’m tired of the constant learning in tech, and I’m tired of pretending I’m not.”

Dozens of comments describe the invisible pressure to stay relevant — people who love technology but feel like it’s consuming every spare moment of their lives. A user wrote, “I used to love learning new things. Now it just feels like survival.” Another confessed, “Every time I take a weekend off, I come back feeling behind.”

I recognise this pressure myself. Posting on LinkedIn has become part of my creative process, but I sometimes catch myself sharing just to stay visible — even when I don’t feel I have anything original to add. That quiet compulsion to say something is a symptom of the same cultural loop: if you’re not posting, you’re falling behind.

The truth is that we don’t need to learn in public. We need to learn together, at our own pace and in our own way. Otherwise, we’re just gobbling information, not absorbing it.

4. Mandated curiosity: the irony of all this is that just as many of us are reaching our cognitive limits, the institutions we work for are beginning to formalise AI learning. But are they really?

Across industries, new programmes are appearing: “AI readiness” checklists and dashboards showing which teams use AI most. Some companies now track “AI tool adoption” the way they track sales KPIs. I’ve seen internal checklists that ask, Which AI tools did you use this week?, as if curiosity itself were a performance metric. The message, even if unintended, is clear: learning is no longer optional, and it has practical consequences.

According to LinkedIn’s research, a whopping 45% of employees feel they have to know how to use AI at work to get promoted or land a new job. That matches what executives say: over one-third of U.S. execs plan to incorporate employees’ ability to effectively use AI into performance reviews or hiring criteria in the year ahead.

There is no argument about the need to stay up to date and to learn how and when to use this technology. But until they are structured and thoughtful, these mandates risk having just one outcome: workslop. This brand new term describes the busywork that emerges from poorly implemented AI processes, and it sits right at this intersection.

It’s beginning to be everywhere: teams doing work about AI instead of work with it. Decks, audits, reports, demos. Artefacts of learning instead of learning itself.

How to Learn AI without losing yourself

Now, let’s shine a little light at the end of the tunnel — shall we?

There’s no one-size-fits-all answer, but these are a great place to start:

1. Audit your AI-Learning Diet: You don’t need to know how AI works, and you don’t need to try everything out there. You need to know where it can actually help you and what you are most interested in. What are your objectives? Is it learning a new skill? Filter the rest out (funnily enough, AI can help with the sifting). Think of it as going on an information diet: remove the bottom 20-30% of low-value content (junk prompts, shallow how-tos) and replace it with high-signal material (deep skill frameworks, peer roundtables, identity work). Build space for reflection: a weekly journal or peer conversation on “what does this AI tool change for me as a professional?”

2. Stick with fewer things for longer: every week there’s something new, but mastery still hides in repetition. Progress doesn’t come from testing every app; it comes from finding one that fits the shape of your work and learning it deeply. This requires discipline, and it ties back to your goals and how you are measuring yourself against them. Choose one project and focus on that alone.

3. Shared uncertainty is generative: no one really knows what they’re doing, and that’s the point. Shared uncertainty is what lets real learning happen. When no one’s pretending to have the answers, the room gets lighter. Stop just watching from the window: participate in a Reddit community, a LinkedIn group, a cohort. In fact, LinkedIn’s research reports that networks are already providing help: 49% in the U.S. say they have people in their network they can turn to for advice on using AI at work.

4. Keep a Personal Knowledge Management system: Notion, Obsidian, paper: choose your weapon, but start putting all the relevant information in a place that is yours, where you can make things your own. We only learn what we use, and what we digest and write down in our own way. This will also force you to cut down on how much you consume.

The bottom line is that sometimes it’s better to leave a rough edge — that’s how you remember you made it yourself.

Final Thought: we learn better together

One last thought — maybe the real problem isn’t that AI feels like a second job, but that we’ve been trying to learn it in the same solitary way we once learned everything else on the Internet: late at night, or at work in-between calls, surrounded by tabs. For a while, that worked. The web rewarded independence; it made us believe that if we were curious enough, we could teach ourselves anything. But this time, the pace is different. The material moves faster than any one of us can absorb, and the more we try to keep up alone, the more fragmented we become.

Learning gets easier when you stop learning alone: sharing small experiments is worth more than any course. The future of AI learning won’t look like solo mastery; it’ll look like networked fluency (something we discussed in “There's no AI in Team”).

Reclaiming the social side of learning: so, maybe the only sustainable way through this is together — not through another webinar or mandatory training, but through a shared rhythm of exploration. When learning becomes collective, curiosity replaces pressure. The question shifts from “How do I stay relevant?” to “What can we explore together?”

Creating permission, not pressure: the best workplaces don’t push people to “use AI more.” They create space to be uncertain, to experiment, to pause. In that permission, learning becomes lighter: not another unpaid shift after work, but a conversation that’s still unfolding. Remember Google’s 20% time for experimentation?

Common language: this doesn’t require grand programmes. It can start small — half an hour to share prompts that actually worked, a thread where people post what helped rather than what looked impressive. Over time, those small gestures build a shared vocabulary that lets people admit what they don’t know without fear of looking slow.

Coherence over speed: when teams learn together, progress compounds not through efficiency, but through coherence. The work starts to make sense again, not because it’s faster, but because everyone is learning in the same direction.

Shared tempo: perhaps the real opportunity isn’t to become more productive, or even more “AI-ready,” but to recover a human rhythm — one that balances ambition with patience. If we can do that, AI will stop feeling like an extra job and start feeling like a shared craft, something alive, collaborative, still imperfect, but ours.

Perhaps this is what maturity in technology feels like, not excitement or fear, but the slow work of integrating it into ordinary life.

Last, but not least, we need to bring fear out of the equation — something that can only be done by removing unwanted sugars (media) from our collective diet, and by planning the right meal inside organizations. This is a responsibility owned by HR, which must step up to the challenge and design a cohesive, clear program — not only in Learning & Development, but also in communication, to silence toxic narratives and build confidence. After all, HR is the team that in the report appears most optimistic about AI’s potential, while Marketing says it’s already making them more efficient. Maybe it’s time they sat at the same table — one brings the appetite, the other the recipe?

The bottom line: AI learning isn’t about more data, it’s about better alignment — choosing the kind of knowledge that supports who you’re becoming.

I was struck by this stat from the LinkedIn report: 62% of professionals globally say they’re optimistic about AI improving their daily work life. Maybe that’s the real cue — not to ask whether we’ll adapt, but how we’ll do it together — turning an imperfect tool into a collective practice.

![image 3](https://substackcdn.com/image/fetch/$s_!M-T-!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F2bbbae7d-8995-49b8-b202-b6400e3ca604_985x586.jpeg)