The AI Mirror: What Using AI Reveals About Your Leadership

Forget coding. The most valuable skill in the age of AI is management: if you can't lead people, you won't be able to lead technology.

“I’ll be your mirror / Reflect what you are, in case you don’t know.”

The Velvet Underground & Nico (1967)

Welcome to this week’s edition!

The headlines are overwhelming. AI is coming for jobs, creating a new class of hyper-productive workers. But this intense focus on AI as a personal productivity tool—now used by nearly 75% of office workers—misses the biggest shift of all.

The very structure of management and career progression is being reinvented. (We also wrote about this in AI and the Corporate Pyramid.)

We have been thinking about AI as a tool, like a better search engine. This is a profound misunderstanding.

The generative AI we're integrating into our workflows today isn't just a tool; it's the ultimate mirror in the form of a cognitive extension. It's a direct amplifier for human thought.

Used well, it sharpens our thinking. Used passively, it can lead us into a state of 'cognitive debt'. The quality of its output is a perfect reflection of the clarity of our instructions, the depth of our business context, and the very structure of our thinking.

How that extension of the mind is managed will determine new career trajectories.

The age of AI won't just reward those who can use it; it will disproportionately reward those who have mastered management, communication, and strategic thinking.

Let's dive in!

Managing Intelligence: The Prompt as a Performance Review

Remember the last time a project went sideways because of a simple miscommunication? This isn't a rare occurrence.

Studies show that executives spend an average of 23 hours per week in meetings, with 71% of senior managers finding them unproductive. Behind much of that waste is a failure to translate intent into clear instructions, and it is a massive bottleneck. According to the Project Management Institute, inaccurate requirements gathering is a primary cause in 38% of project failures.

This is a timeless problem. AI doesn't magically read a customer's mind, but it dramatically accelerates the feedback loop. A manager can now use AI to prototype an idea based on an initial requirement, show it to the customer, and iterate in hours instead of weeks. AI helps clarify what the customer wants by making the process of "showing, not just telling" almost instantaneous.

This process starts with the prompt, which acts as a mirror for our own clarity. Think of it like brewing beer. A brewmaster’s expertise lies in choosing the initial ingredients. Once fermentation begins, the process is largely set: the quality of the beer was determined at the start. It's the same with AI. The highest-leverage work is getting the initial prompt right. A fuzzy prompt yields a fuzzy result.

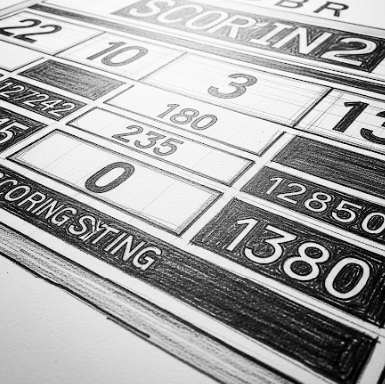

This is where management skills are tested. A good project brief requires clear objectives, defined constraints, and rich context—the very anatomy of a sophisticated AI prompt. Good projects have always started with refining the core questions. The difference now is that AI makes the quality of those questions quantifiable for the first time. We can imagine a "Prompt Quality Score" becoming a standard metric in performance reviews, making the intangible skill of 'clear thinking' a tangible, measurable competency.
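To make that anatomy concrete, here is a minimal sketch in Python. It assumes a brief has exactly the three parts named above (objective, constraints, context); the `ProjectBrief` class, its method names, and the sample briefs are hypothetical illustrations, not an established template.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectBrief:
    """Hypothetical container for the three parts of a brief: objective, constraints, context."""
    objective: str
    constraints: list[str] = field(default_factory=list)
    context: str = ""

    def missing_parts(self) -> list[str]:
        """Return the names of any parts that were left empty."""
        missing = []
        if not self.objective.strip():
            missing.append("objective")
        if not self.constraints:
            missing.append("constraints")
        if not self.context.strip():
            missing.append("context")
        return missing

    def to_prompt(self) -> str:
        """Render the brief as plain prompt text, skipping empty parts."""
        lines = [f"Objective: {self.objective}"]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {c}" for c in self.constraints)
        if self.context:
            lines.append(f"Context: {self.context}")
        return "\n".join(lines)


# Invented example briefs, for illustration only.
fuzzy = ProjectBrief(objective="Improve the dashboard")
clear = ProjectBrief(
    objective="Cut dashboard load time for power users",
    constraints=["No new vendors", "Ship within one quarter"],
    context="Power users open the dashboard many times a day.",
)

print(fuzzy.missing_parts())  # ['constraints', 'context']
print(clear.to_prompt())
```

The fuzzy brief is not wrong, just incomplete, and the check makes the gap visible before any prompt is sent.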

The Conductor's Baton: Orchestrating the Human-AI Team

This need for clarity is magnified with the rise of AI 'agents'—automated workflows designed to execute multi-step tasks. As these agents become more capable, a leader's value shifts from task execution to process architecture. The manager's new role is Cognitive Triage. This isn't just about project efficiency; it's about managing the team's collective cognitive health. A leader must expertly decide which tasks to delegate to AI and which require human nuance, not just to ensure quality, but to strategically prevent the team's core problem-solving muscles from atrophying.

Imagine a product manager planning a new feature. The Cognitive Triage model looks different from the old way of working:

Task 1 (AI): "Analyze the last 500 user feedback tickets tagged with 'dashboard'. Summarize the top 5 most requested improvements."

Task 2 (Human - UX Designer): "Based on this AI summary, conduct three 30-minute interviews with power users to understand the 'why' behind these requests."

Task 3 (AI): "Generate five low-fidelity wireframe concepts for a dashboard that addresses the top theme from the user interviews."

Task 4 (Human - Team): "As a team, let's review these five concepts and decide which one best balances user needs with our technical constraints."

In this model, the manager designs a workflow that uses AI for scale and humans for wisdom. This is crucial for managing diverse teams of bots, freelancers, and employees effectively (discussed in AI Productivity vs Performance).
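The four-step example above can be written down as data, which makes the split between AI and human work easy to inspect. This is only a sketch: the `Owner` and `Task` types and the two helper functions are assumptions made for illustration, and the task texts are paraphrased.

```python
from dataclasses import dataclass
from enum import Enum


class Owner(Enum):
    AI = "AI"
    HUMAN = "Human"


@dataclass(frozen=True)
class Task:
    step: int
    owner: Owner
    instruction: str


# The four steps from the product-manager example, paraphrased.
workflow = [
    Task(1, Owner.AI, "Summarize the top 5 requests from 500 'dashboard' tickets"),
    Task(2, Owner.HUMAN, "Interview three power users about the 'why'"),
    Task(3, Owner.AI, "Generate five low-fidelity wireframe concepts"),
    Task(4, Owner.HUMAN, "Review the concepts against technical constraints"),
]


def human_share(tasks: list[Task]) -> float:
    """Fraction of steps that stay with people (0.0 for an empty workflow)."""
    if not tasks:
        return 0.0
    return sum(t.owner is Owner.HUMAN for t in tasks) / len(tasks)


def ends_with_human_review(tasks: list[Task]) -> bool:
    """True when the final step is owned by a human."""
    return bool(tasks) and tasks[-1].owner is Owner.HUMAN


print(human_share(workflow))             # 0.5
print(ends_with_human_review(workflow))  # True
```

A manager doing Cognitive Triage could watch numbers like these over time: a human share drifting toward zero is one possible early signal of the atrophy described above.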

As agents become truly autonomous, the manager's role will evolve again from Conductor to Portfolio Manager of Intelligent Assets. The job will be less about writing prompts and more about setting goals, defining ethical guardrails, and allocating budgets to a team of autonomous agents.

But can AI be trained to do what a good manager does?

It can certainly handle managerial tasks—scheduling, resource allocation, data analysis. But it cannot replicate the art of leadership: providing empathetic feedback, fostering psychological safety, or making a strategic judgment call that goes against the data.

AI can run the management science, but a human must still practice the leadership art.

The Manager as a Human API: Routing Knowledge

Not everyone on a team will adopt AI at the same pace. This creates a new, critical function for the manager: to act as a Human API. In technology, an API (Application Programming Interface) is a messenger that allows different software systems to communicate. In a team, the manager plays a similar role, acting as a networker or resource investigator. They must:

Listen for 'calls': Identify business problems or bottlenecks.

Query the 'database': Know which team member has the relevant AI skill.

Return the 'response': Make the connection, facilitating collaboration.

This transforms the manager from a supervisor into a strategic knowledge broker. But this doesn't happen by accident. It requires formalizing the role by creating an internal 'prompt library' or building 'AI consultation' into performance reviews. The goal is to turn individual expertise into a scalable organizational asset, a process that can be guided by a dedicated AI Ops Lead.
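As a rough sketch of the Human API idea, the three steps (listen, query, return) map onto a simple lookup. Everything here is hypothetical: the registry contents, the names, and the `route` function are invented for illustration, and a real team would maintain this knowledge in whatever shared tool it already uses.

```python
# Hypothetical skill registry: which teammate has which AI skill.
SKILL_REGISTRY: dict[str, list[str]] = {
    "summarizing feedback": ["Priya"],
    "wireframe generation": ["Sam", "Lee"],
}


def route(needed_skill: str, registry: dict[str, list[str]]) -> list[str]:
    """Query the 'database' and return the 'response': teammates with the skill.

    Returns an empty list when nobody has the skill yet, which is itself
    useful information about a gap to close.
    """
    return registry.get(needed_skill.strip().lower(), [])


# Listen for a 'call': a bottleneck that needs wireframes quickly.
print(route("Wireframe Generation", SKILL_REGISTRY))  # ['Sam', 'Lee']
print(route("forecasting", SKILL_REGISTRY))           # []
```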

Building a 'Prompt-First' Culture

The skills of a few good managers can only go so far. The real transformation happens when an entire organization creates a "prompt-first" culture. This is a culture where clarity and precision become the default operational mode.

In a prompt-first culture:

Project kick-offs begin with a "Master Prompt Document" that defines the problem space, constraints, and success criteria.

Team communication shifts from "Can someone look into this?" to "Here is a starter prompt to analyze the Q3 sales dip. Please refine it."

Cross-functional handoffs become seamless because the "what" and "why" are explicitly defined.

This culture of precision is the organizational-level defense against wasted cycles. From this foundation, we can move to measuring its impact.

The ROI of Clarity: When Your Thoughts Have a Price Tag

Here’s where the abstract becomes brutally concrete. For the first time, we can attach a direct, quantifiable cost to a leader's lack of clarity. A leader who gets the desired result in three precise prompts has a demonstrably higher ROI than one who meanders through fifteen. This isn't just about saving money; it's a direct measure of project velocity.

This creates a new, immutable ‘Proof of Work’ for managerial effectiveness. We can even imagine a Prompt Quality Score (PQS) being developed, calculated with a formula like: PQS = (Contextual Richness × Specificity) / Number of Iterations. A high score indicates a leader can get to a high-quality output with minimal rework. By tagging API calls with user and project IDs, a CFO can see the literal 'cost-per-insight' for different teams.
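Here is a minimal sketch of both ideas in Python. It assumes contextual richness and specificity are each rated on a 1-to-5 scale (the formula above does not specify one), and that every API call is logged with a user ID, a project ID, and a cost in cents. The `ApiCall` record and the sample figures are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


def prompt_quality_score(contextual_richness: float, specificity: float, iterations: int) -> float:
    """PQS = (contextual richness x specificity) / number of iterations."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    return (contextual_richness * specificity) / iterations


@dataclass(frozen=True)
class ApiCall:
    """Hypothetical log record for one tagged API call."""
    user_id: str
    project_id: str
    cost_cents: int


def cost_by_project(calls: list[ApiCall]) -> dict[str, int]:
    """Total spend per project tag, in cents."""
    totals: dict[str, int] = defaultdict(int)
    for call in calls:
        totals[call.project_id] += call.cost_cents
    return dict(totals)


# Same assumed ratings (4 and 5), different amounts of rework.
print(round(prompt_quality_score(4, 5, iterations=3), 2))   # 6.67
print(round(prompt_quality_score(4, 5, iterations=15), 2))  # 1.33

# Invented log: one leader needs 3 calls, another needs 15, at 2 cents each.
calls = [ApiCall("leader_a", "project_x", 2)] * 3 + [ApiCall("leader_b", "project_y", 2)] * 15
print(cost_by_project(calls))  # {'project_x': 6, 'project_y': 30}
```

The score is only as good as the ratings fed into it, so in practice the hard part would be agreeing on how richness and specificity get rated, not the arithmetic.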

However, this hyper-quantification will inevitably create a new kind of AI-Induced Performance Anxiety. The manager's role must evolve to include coaching employees through this pressure, fostering the psychological safety required for experimentation in an environment of radical transparency.

From Labor Arbitrage to Cognitive Arbitrage

The first wave of AI adoption is about efficiency—using AI to do old tasks faster and cheaper. This is labor arbitrage.

The real, sustainable advantage will come from the second wave: cognitive arbitrage.

This is the art of using AI not just to answer known questions faster, but to ask entirely new questions and generate novel insights. The value isn't in summarizing 1,000 reviews 90% faster. It's in finding the one contradictory sentence across all 1,000 reviews that reveals a flaw in your product or an untapped market opportunity. This requires a leadership style that encourages curiosity and rewards intelligent failure.

The Autopilot Trap: The Danger of Managerial Abdication

Of course, this new paradigm comes with significant risks. The greatest danger is not that AI will get things wrong, but that managers will blindly accept its outputs.

This is the "Autopilot Trap," and its primary consequence is the accumulation of cognitive debt. It's the Black Mirror version of the future of work, where convenience leads to unforeseen consequences.

Skill Atrophy & Cognitive Debt: If you only ever ask for the answer, you risk losing the ability to find it yourself. A recent MIT study, "Your Brain on ChatGPT," found that users who relied heavily on the AI showed weaker neural connectivity and poorer memory recall. The researchers termed this "cognitive debt": deferring mental effort in the short term at the price of long-term costs like diminished critical thinking. This is reflected in other research showing that while AI boosts productivity, 77% of employees report it has also increased their workload, creating a feeling of being "buried by bots" (we wrote about this in Buried by Bots).

Amplified Bias: An unexamined bias in a manager's prompt can be laundered through the AI's complex systems and returned as a seemingly objective, data-driven recommendation.

Accountability Black Box: When an AI-suggested strategy fails, who is accountable? A poor manager can easily blame the "black box," diffusing their responsibility.

Final Thoughts: What the Mirror Shows Us

So, what does the AI mirror show us today?

It reflects our own clarity, our biases, and our strategic abilities with unflinching accuracy.

The fundamental shift is clear: AI isn’t replacing managers; it's exposing them.

It rewards clarity and strategy while penalizing vagueness and a lack of critical engagement. The World Economic Forum now estimates that 40% of the workforce will need reskilling due to AI, and it's these uniquely human, managerial skills that are in highest demand.

For Workers: The most crucial professional development is not mastering a specific AI tool, but mastering the timeless skills of structured thinking to protect your own cognitive capital. This doesn't mean leaders can be technically illiterate. On the contrary, it requires sufficient, strategic technical literacy: not the ability to code, but the ability to understand the fundamental strengths and weaknesses of the models being used.

For Employers: The challenge is to prepare better managers for this new context. Stop asking candidates if they have "ChatGPT experience." Instead, design interview tasks that test for the underlying competencies. Give them a complex problem and a powerful AI. Watch how they deconstruct the problem, instruct the AI, and critique the output. This is the new, real-world work sample for identifying leaders who can thrive, not just survive, in an age of intelligent machines.

Until next week!

Matteo